SpeedFusion and the Internet Mix (IMIX)

Internet Mix (IMIX) is a measurement of typical internet traffic passing through network equipment such as routers, switches, or firewalls. When equipment is tested with an IMIX of packets, the measured performance is assumed to resemble what the equipment would deliver in real-world use.

The IMIX traffic profile is used in the industry to simulate real-world traffic patterns and packet distributions. IMIX profiles are based on statistical sampling done on internet routers. More information about IMIX can be found here: https://en.wikipedia.org/wiki/Internet_Mix

The IMIX Standard and the SpeedFusion Overhead

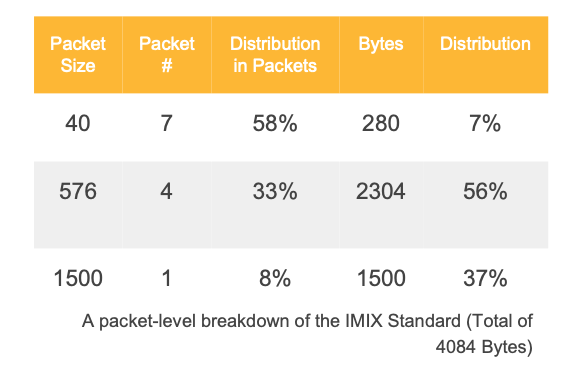

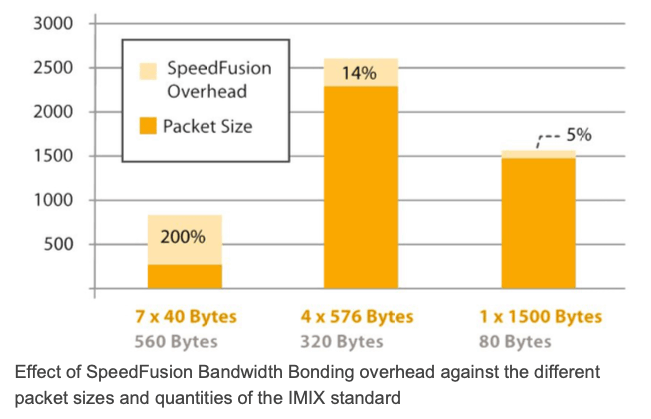

As the chart on the left shows, when a SpeedFusion VPN tunnel is used to transmit IMIX data (4084 bytes), an additional 960 bytes of SpeedFusion overhead is required.

The SpeedFusion overhead is 19% of the total transmitted data (960 of the 5044 bytes of IMIX plus overhead). Because SpeedFusion adds a fixed number of bytes to every packet transmitted (an additional 80 bytes), SpeedFusion Bandwidth Bonding is much more efficient when transmitting larger packets. Measured against the packet size itself, SpeedFusion adds just 5% bandwidth overhead at packet sizes of 1500 bytes, but at packet sizes of 40 bytes the overhead rises to 200% (80 bytes of overhead for 40 bytes of data, or about 67% of the total transmitted).
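The arithmetic behind these figures can be reproduced with a short Python sketch. It assumes the "simple IMIX" distribution described on the Wikipedia page linked above (seven 40-byte packets, four 576-byte packets, and one 1500-byte packet), which is not spelled out in this article but does add up to the 4084 bytes quoted here. The function names are illustrative only and are not part of any SpeedFusion tool.

```python
# Assumption: the "simple IMIX" distribution as {packet size in bytes: count}.
# 7 x 40 + 4 x 576 + 1 x 1500 = 4084 bytes, matching the figure in the text.
SIMPLE_IMIX = {40: 7, 576: 4, 1500: 1}

# Fixed SpeedFusion overhead per transmitted packet, as stated in the text.
OVERHEAD_PER_PACKET = 80  # bytes


def payload_bytes(mix):
    """Total bytes of original traffic in the mix."""
    return sum(size * count for size, count in mix.items())


def overhead_bytes(mix, per_packet=OVERHEAD_PER_PACKET):
    """Total overhead: a fixed cost for every packet in the mix."""
    return per_packet * sum(mix.values())


def overhead_share_of_total(mix):
    """Overhead as a fraction of everything sent (payload + overhead)."""
    overhead = overhead_bytes(mix)
    return overhead / (payload_bytes(mix) + overhead)


def overhead_relative_to_payload(mix):
    """Overhead as a fraction of the original payload only."""
    return overhead_bytes(mix) / payload_bytes(mix)


if __name__ == "__main__":
    print("IMIX payload bytes: ", payload_bytes(SIMPLE_IMIX))
    print("IMIX overhead bytes:", overhead_bytes(SIMPLE_IMIX))
    print(f"IMIX overhead share of total: {overhead_share_of_total(SIMPLE_IMIX):.1%}")

    # A single packet of each size shows why small packets are costly.
    for size in (1500, 576, 40):
        single = {size: 1}
        print(
            f"{size:>4}-byte packet: "
            f"{overhead_relative_to_payload(single):.1%} of payload, "
            f"{overhead_share_of_total(single):.1%} of total"
        )
```

The two ratio functions are kept separate on purpose: the 19% figure is a share of the total transmitted data, while the 5% and 200% figures compare the overhead with the packet size itself.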